Explainable AI: Enhancing Trust, Performance, and Regulatory Compliance

December 12, 2024

Artificial Intelligence (AI) is rapidly transforming industries, from healthcare and finance to autonomous systems and legal services. However, as AI models grow more complex, they often become black boxes: they produce accurate predictions without exposing the reasoning behind them. This lack of transparency raises critical concerns about accountability, fairness, and trust.

Explainable AI (XAI) is a set of methods and design practices that make AI decisions understandable, auditable, and trustworthy. Also known as AI explainability or interpretable machine learning, it helps stakeholders answer questions such as "Why did the model make this decision?" and "What data or features influenced the outcome?"

In this blog, we break down the meaning of explainability in AI, why it's essential for regulated industries and high-risk applications, and how organizations can balance model interpretability with predictive performance. From credit scoring systems to cancer diagnosis tools, real-world use cases reveal the growing importance of transparent and responsible AI systems.

What is Explainable AI (XAI)?

Explainable AI (XAI) is not just a technical feature; it’s a design principle that addresses one of the most urgent challenges in modern AI systems: trust without transparency.

At its core, explainability refers to the ability to trace, interpret, and communicate how an AI model arrives at a particular output. In contrast to black-box models, where only the input and output are visible, explainable AI (XAI) exposes the inner logic of a system, making it easier for stakeholders to verify, validate, and intervene.

True explainability goes beyond visualizations or post-hoc approximations. It requires clarity on four interlocking layers:

- Prediction-Level Insight: What features drove this prediction? Why did the model favor one outcome over another?

- Model Behavior: How is the model structured? What patterns has it learned from the data, and can those be audited?

- Data Transparency: What training data was used, and how might it reflect bias, imbalance, or noise?

- Human Control Points: What levers are available to govern or override model behavior if it deviates from acceptable outcomes?
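To make the first layer, prediction-level insight, more concrete, here is a minimal sketch for the simplest case: a linear scoring model. Everything in it (the feature names `income_k`, `debt_ratio`, and `late_payments`, the weights, and the feature means) is hypothetical and chosen only for illustration. For a linear model, each feature's contribution can be computed exactly as its weight times its deviation from the average input; when features are treated as independent, this matches the SHAP values of a linear model. More complex models need approximation methods such as SHAP or LIME instead.

```python
"""Prediction-level attribution for a linear scoring model (illustrative sketch)."""

# Hypothetical credit-scoring model: weights, intercept, and feature means are
# invented for illustration and do not come from any real lender.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
INTERCEPT = 0.5
FEATURE_MEANS = {"income_k": 60.0, "debt_ratio": 0.35, "late_payments": 1.0}


def score(x: dict[str, float]) -> float:
    """Linear score: intercept plus the weighted sum of the features."""
    return INTERCEPT + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def contributions(x: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution relative to the average applicant.

    For a linear model, w_i * (x_i - mean_i) splits the gap between this
    prediction and the prediction for the mean input exactly across features.
    """
    return {name: WEIGHTS[name] * (x[name] - FEATURE_MEANS[name]) for name in WEIGHTS}


if __name__ == "__main__":
    applicant = {"income_k": 45.0, "debt_ratio": 0.55, "late_payments": 3.0}
    contrib = contributions(applicant)
    # List features by how strongly they moved the score, largest magnitude first.
    for name, value in sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"{name:>14}: {value:+.2f}")
    # The contributions add up to this gap, so nothing is left unattributed.
    gap = score(applicant) - score(FEATURE_MEANS)
    print(f"total vs. average applicant: {gap:+.2f}")
```

Because the contributions sum to the difference between this applicant's score and an average applicant's score, a reviewer can check the explanation against the prediction itself.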

In regulated or high-stakes domains, this visibility isn't optional—it’s foundational.

Interpretable machine learning enables not only oversight but also collaboration between human experts and AI systems. The goal isn’t just to understand the model; it’s to make it accountable.

Why is Explainability needed in AI?

As AI systems become embedded in decisions that affect lives, money, and public safety, explainability is no longer optional; it’s a prerequisite for trust, adoption, and compliance.

Even the most accurate machine learning models will struggle to gain stakeholder buy-in if their decision-making processes remain opaque. This is especially true in high-risk and highly regulated industries, such as finance, insurance, and healthcare, where accountability, auditability, and fairness are mandated, not just expected.

While deep learning and ensemble models have achieved state-of-the-art results, their complexity often makes them black boxes: systems that deliver outputs without interpretable reasoning. More interpretable models, such as decision trees or linear models, are easier to understand but can fall short on accuracy in complex real-world scenarios.

This trade-off between model performance and interpretability is one of the most urgent challenges in responsible AI development. Organizations that fail to address it face not only technical debt but also regulatory risk, reputational damage, and missed business opportunities.

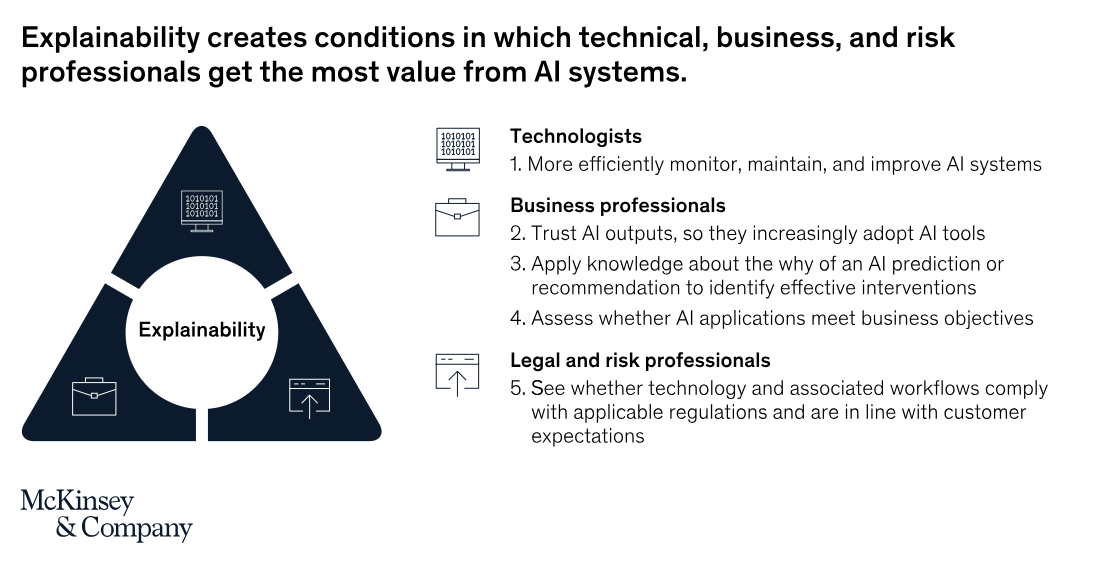

According to McKinsey & Company, organizations that master AI explainability can:

- Accelerate stakeholder trust and internal adoption

- Enhance decision quality and reduce reliance on unverified outputs

- Strengthen risk management frameworks by identifying systemic bias and failure points

Moreover, in applications where AI systems must justify their outcomes, such as credit scoring, claim approvals, patient triage, and autonomous decision-making, a lack of transparency can lead to legal liability and customer distrust.

Explainability is what transforms an AI model from a black box into a collaborative decision-making tool, one that regulators, end users, and domain experts can confidently interrogate and improve.

Source: McKinsey - Why businesses need explainable AI—and how to deliver it

Additionally, AI and machine learning systems that are retrained or updated on new data do not keep fixed decision logic: the patterns they rely on can shift as the data changes. This evolving behavior adds significant complexity and makes explainability a core requirement for transparent AI systems. As models adapt over time, their decisions can become harder to interpret, which underscores the need for responsible AI development that prioritizes model interpretability, accountability, and ongoing oversight.
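One concrete form of ongoing oversight is checking whether the data a model sees in production still resembles the data it was trained on. The sketch below computes the Population Stability Index (PSI), a widely used drift statistic, over fixed bins. It is an illustrative choice rather than a method the text prescribes, and the bin edges and data in it are hypothetical.

```python
import math
from bisect import bisect_right


def bin_proportions(values, edges):
    """Share of values falling in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [c / total for c in counts]


def population_stability_index(baseline, current, edges, eps=1e-6):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected).

    `baseline` plays the role of the training-time distribution and `current`
    the production distribution. Empty bins are floored at `eps` so the
    logarithm stays finite.
    """
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    # Hypothetical model scores at training time and after a shift in production.
    baseline = [i / 100 for i in range(100)]
    shifted = [v + 0.3 for v in baseline]
    edges = [0.25, 0.5, 0.75]
    print(population_stability_index(baseline, baseline, edges))  # no shift
    print(population_stability_index(baseline, shifted, edges))   # large shift
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are conventions rather than standards. A drift alert says that the inputs changed, not why the model's decisions changed; attribution methods are still needed for that.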

- Model acceptance: Gaining acceptance is one of the biggest challenges in deploying AI at scale. Because AI models often behave as black boxes, users can see the input and the output but not the decision-making logic in between, which makes it hard to trust or validate outcomes. This lack of transparency often stalls adoption, especially in regulated industries. Explainable AI (XAI) bridges this gap by revealing how specific inputs influence predictions and translating model behavior into human-understandable terms. By making AI systems more transparent and interpretable, explainability increases stakeholder trust, accelerates adoption, and helps confirm that the model behaves in line with user expectations.

- Enhance model performance: Improving AI model performance depends on understanding how a model behaves during training and in production. Deep learning models are trained by optimizing over a high-dimensional, non-convex loss surface, which makes them difficult to debug and tune. Without model transparency, error diagnosis is harder, iteration cycles slow down, and confidence in system outputs drops. With explainable machine learning, teams can analyze feature impact, detect model drift, and pinpoint data quality issues, enabling targeted improvements to both model architecture and training pipelines. This can improve accuracy and robustness, shorten time to deployment, and increase reliability in mission-critical environments.

- Investigate correlation: Traditionally, ML models operate as opaque processes: you know the input and the output, but not how the model reached a particular decision. Explainability makes this process transparent, enabling users to investigate correlations between factors and determine which factors carry the most weight in a decision (associations the model has learned, not necessarily causal effects).

- Enable advanced controls: Explainable AI (XAI) empowers teams to go beyond passive monitoring and take proactive control of model behavior. With greater transparency in the AI decision-making process, users can supervise predictions, set guardrails, and adjust outputs to meet business rules or ethical standards. This level of AI governance is especially vital in high-risk or regulated environments, where blind automation can lead to unacceptable outcomes.

- Ensuring unbiased predictions: AI models often inherit biases from training data, which can result in unfair or discriminatory outcomes. Without explainability, these biases remain hidden, undermining trust and compliance. XAI techniques make it possible to trace decisions back to their root inputs, enabling teams to detect and address skewed data patterns. This supports bias mitigation in AI and helps build fair and accountable machine learning systems.

- Ensuring compliance with regulations: Global AI regulations, such as the EU AI Act and the General Data Protection Regulation (GDPR), increasingly demand transparency, accountability, and explainability in automated decision systems. For high-risk AI applications, providing clear explanations is not just best practice; it is increasingly a legal requirement. Explainable AI enables organizations to demonstrate compliance with AI transparency standards, maintain auditable decision flows, and meet GDPR obligations around automated decision-making, often described as a "right to explanation."
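The "Investigate correlation" point above can be illustrated with permutation importance, a model-agnostic way to estimate how much a model relies on each feature: shuffle one feature column, re-score, and measure how much accuracy drops. The sketch below is a plain-Python illustration with a hypothetical toy model and synthetic data; for fitted scikit-learn estimators, `sklearn.inspection.permutation_importance` provides the same idea.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    X is a list of rows, each a list of feature values. A large drop means
    the model relies heavily on that feature; a drop near zero means its
    predictions barely depend on it.
    """
    rng = random.Random(seed)
    base = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with column j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical data: the label depends only on feature 0; feature 1 is noise.
    data_rng = random.Random(42)
    X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
    y = [int(row[0] > 0.5) for row in X]

    def toy_model(row):
        # Stand-in for a trained classifier that happens to use only feature 0.
        return int(row[0] > 0.5)

    print(permutation_importance(toy_model, X, y))
```

Shuffling breaks a feature's relationship with the label, so a large drop signals heavy reliance on that feature. The scores describe what the model has learned rather than causal effects, and strongly correlated features can share or mask each other's importance.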

The Risk of AI without Explainability

The lack of explainable AI (XAI) and model interpretability has contributed to significant challenges across regulated industries, including operational disruptions, regulatory non-compliance, and growing public and stakeholder mistrust in AI-driven decision-making. As enterprises increasingly rely on complex models such as LLMs for high-risk functions, a lack of AI transparency and accountability exposes them to legal liability, reputational risk, and audit failures. The following cases illustrate these risks:

Recruitment Bias: Amazon’s Hiring Tool

Amazon built an experimental recruitment tool that used machine learning to sort through job applications and identify the most promising candidates. The tool was trained on resumes submitted to Amazon over a 10-year period, most of which came from men, reflecting the male majority in the tech industry. As a result, it learned to favor resumes containing terms more commonly used by men, such as "executed" or "captured", and, according to Reuters, to penalize resumes that included the word "women's". The model was never given applicants' gender; it picked up gender-correlated patterns from imbalanced data and keyword associations and treated them as signals of a strong candidate. The result was a tool that discriminated against female candidates, whose resumes were less likely to contain the favored terms and therefore less likely to be selected.

Autonomous Vehicles: Tesla and Uber Incidents

On March 18, 2018, a self-driving Uber test vehicle fatally struck a pedestrian in Arizona. The AI failed to identify her as a pedestrian or predict her movements, exposing critical gaps in decision-making. Similarly, Tesla is facing U.S. federal probes into its "Full Self-Driving" system following multiple crashes, including a fatality, raising concerns about its reliability and safety.

Financial Industry Failures: Equifax Credit Scoring Errors

In 2022, credit reporting agency Equifax had an issue with its systems that led to inaccurate credit scores for millions of consumers. For approximately 300,000 people, the discrepancies were significant, exceeding 25 points. These inaccuracies may have led to wrongful credit denials for some borrowers, raising concerns about the integrity of the scoring process.

While Equifax did not directly attribute the problem to its AI systems, there has been speculation that the company's use of AI in credit score calculation may have contributed to the errors.

Healthcare Misdiagnosis: IBM Watson for Oncology

IBM Watson for Oncology was designed to assist with cancer treatment recommendations. However, it produced incorrect or unsafe recommendations in some cases, and healthcare professionals found it hard to trust because it offered no clear explanation for its decisions. The project faced backlash, and some hospitals eventually discontinued its use.

Use Cases of Explainable AI (XAI)

In mission-critical domains, XAI enables clearer decisions, stronger accountability, and improved trust across the AI lifecycle.

Benefits of XAI in Healthcare

In clinical decision support and diagnostics, explainable AI provides visibility into how patient data, test results, and symptoms contribute to medical outcomes. This can strengthen clinician trust, reduce liability, and support regulatory compliance in healthcare AI systems. In pharmaceutical R&D, XAI can improve transparency in drug candidate selection, helping researchers validate why certain molecules advance through drug discovery pipelines.

Benefits of XAI in Financial Services

From credit scoring to fraud detection, explainable AI (XAI) supports model fairness and auditability. By making algorithmic decisions transparent, such as why a loan was denied or a transaction was flagged, banks can comply with anti-discrimination laws, improve internal oversight, and build consumer trust in AI-powered financial systems.
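As a sketch of how a bank might screen loan decisions for disparate outcomes, the code below compares approval rates across groups and reports the ratio of the lowest rate to the highest. The decisions, group labels, and the 0.8 threshold are all illustrative assumptions: the threshold borrows the "four-fifths" heuristic from U.S. employment-selection guidelines and is not, by itself, a legal test for lending.

```python
from collections import defaultdict

# Illustrative screening threshold borrowed from the "four-fifths" heuristic in
# U.S. employment-selection guidelines; an assumption, not a lending standard.
FOUR_FIFTHS = 0.8


def approval_rates(decisions, groups):
    """Approval rate per group; each decision is 1 (approved) or 0 (denied)."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        approved[group] += decision
        total[group] += 1
    return {group: approved[group] / total[group] for group in total}


def disparate_impact_ratio(decisions, groups):
    """Lowest group approval rate divided by the highest group approval rate."""
    rates = approval_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        # No group was approved at all, so approval rates do not differ.
        return 1.0
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical loan decisions for two anonymized applicant groups.
    decisions = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["A"] * 5 + ["B"] * 5
    ratio = disparate_impact_ratio(decisions, groups)
    print(f"approval rates: {approval_rates(decisions, groups)}")
    print(f"ratio = {ratio:.2f}; below threshold: {ratio < FOUR_FIFTHS}")
```

A low ratio only signals that outcomes differ; it does not explain why. Pairing this check with feature attributions helps reveal whether the gap is driven by legitimate risk factors or by proxies for a protected attribute.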

Benefits of XAI in Legal and Regulatory Settings

AI used in legal analytics and case prediction must be explainable by design. XAI ensures clarity in how precedents, statutes, and judicial patterns are applied, enabling ethical, traceable, and compliant AI deployments within law firms and regulatory agencies.

Benefits of XAI in Autonomous Systems

In self-driving vehicles and robotics, explainability helps decode why a system made a decision - like misclassifying a stop sign or misinterpreting pedestrian movement. This is critical for post-incident reviews, improving AI safety and accountability, and enhancing public trust in autonomous technologies.

Conclusion

Explainable AI (XAI) is no longer just nice to have; it is a must-have for enterprises.

As AI systems increasingly influence high-stakes decisions in sectors like healthcare, finance, and law, AI transparency and interpretability are essential to ensure trust, safety, and regulatory compliance. Without clear visibility into how AI/ML models generate outcomes, organizations risk deploying systems that are opaque, biased, or non-compliant, leading to operational failures and reputational damage.

Transparency into how an AI model makes decisions is at the heart of responsible AI. It empowers businesses to align machine learning performance with ethical principles such as fairness, accountability, and AI governance, and it enables stakeholders, including auditors, regulators, and end users, to validate AI-driven outcomes with confidence. By prioritizing interpretability, enterprises can unlock the full potential of AI while safeguarding against unintended consequences.

Is Explainability critical for your AI solutions?

Schedule a demo with our team to understand how AryaXAI can make your mission-critical 'AI' acceptable and aligned with all your stakeholders.
