An Explainable AI and Alignment framework to build trustworthy and responsible AI.

For researchers, businesses and IT departments who need a powerful yet easy-to-use AI Alignment and Explainability framework to bring transparency and help build trust in AI systems!

AryaXAI in the ML Lifecycle

Gain trust through accurate AI Explainability!

Gain trust by being able to explain your AI solutions accurately and consistently to any stakeholder.

True-to-model explanations

In addition to well-tested open-source XAI algorithms, AryaXAI uses a proprietary XAI algorithm to provide true-to-model explainability for complex techniques such as deep learning.

Provides multiple types of explanations

Different use cases require different types of explanations for different users. With AryaXAI, users can also provide other types of explanations, such as similar cases and what-if scenarios.

Plug and use techniques that work

You can deploy any XAI method on AryaXAI by simply uploading your model and sending the engineered features. The framework can handle a variety of datasets.

Near real-time explainability through API

Depending on the complexity of your model, AryaXAI can provide near real-time explanations through an API, which you can integrate into downstream systems of your choice.

Decode, Debug & Describe your AI models

AI solutions are evaluated on their performance and on their ability to be transparent and explainable to all key stakeholders: product/business owners, data scientists, IT, risk owners, regulators and customers. Explainability is a fundamental requirement for mission-critical AI use cases and can be the deciding factor in whether a model is used.

AryaXAI's explainable AI methods go beyond open-source approaches to ensure models are explained accurately and consistently. For complex techniques such as deep learning, AryaXAI uses a patent-pending algorithm called ‘backtrace’ to provide multiple explanations.

Similar cases: References as explanations for accurate AI transparency

Training data is the basis for model learning. Identifying similar cases from the model's point of view can explain why and how the model arrived at a prediction.

AryaXAI uses model explainability to identify training samples that are similar to the inference sample and returns them as a list of similar cases. Users get an in-depth analysis that maps data similarity, feature importance and prediction similarity. References as explanations can be a powerful source of evidence when interpreting model behaviour.
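One common way to find similar cases "from the model's point of view" is to compare samples in feature-attribution space rather than raw feature space. The sketch below assumes you already have one attribution vector per sample (for example, from SHAP or a comparable method) and ranks training samples by cosine similarity to the inference sample. It illustrates the general idea only; it is not AryaXAI's method, and the helper `similar_cases` and the toy vectors are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similar_cases(
    query_attr: Sequence[float],
    train_attrs: List[Sequence[float]],
    k: int = 3,
) -> List[Tuple[int, float]]:
    """Hypothetical helper: rank training samples by how similar their attribution
    vectors are to the query's and return the top k as (index, similarity)."""
    scored = [(i, cosine(query_attr, attr)) for i, attr in enumerate(train_attrs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy attribution vectors: one row per training sample, one column per feature.
train_attrs = [
    [0.9, 0.1, 0.0],
    [0.1, 0.8, 0.1],
    [0.8, 0.2, 0.1],
    [0.0, 0.1, 0.9],
]
query_attr = [0.85, 0.15, 0.05]  # attributions for the inference sample

for index, score in similar_cases(query_attr, train_attrs, k=2):
    print(f"training sample {index}: similarity {score:.3f}")
```

Comparing attributions rather than raw inputs means two samples count as "similar" when the model relied on the same features in the same way, which is closer to the model-centric notion of similarity described above.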

What-if: Run hypothetical scenarios right from the GUI

Validate and build trust in the model by simulating input scenarios. Understand which features need to change to obtain the desired predictions.

AryaXAI allows users to run multiple ‘What-if’ scenarios directly from the GUI. The framework queries the model in real time and shows the resulting predictions to users. The feature is not limited to advanced users; even business users can use it without any technical training or skills. Such ‘What-if’ scenarios give users quick insights into the model.
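Conceptually, a what-if run copies a base input, overrides a few features, and re-queries the model for each scenario. The sketch below shows that loop against a stand-in model; `toy_model`, its feature names and weights, and `run_what_if` are all hypothetical and are not part of AryaXAI's GUI or API.

```python
from typing import Callable, Dict

Features = Dict[str, float]


def toy_model(x: Features) -> float:
    """Hypothetical stand-in for a deployed model: a simple linear score."""
    return 0.5 * x["income"] / 1000 - 2.0 * x["missed_payments"] + 0.1 * x["tenure_years"]


def run_what_if(
    model: Callable[[Features], float],
    base: Features,
    scenarios: Dict[str, Features],
) -> Dict[str, float]:
    """Apply each scenario's feature overrides to a copy of the base input and
    return the model's prediction for every scenario (plus the unchanged base)."""
    results = {"base": model(base)}
    for name, overrides in scenarios.items():
        candidate = {**base, **overrides}  # new dict, so the base input is never mutated
        results[name] = model(candidate)
    return results


base = {"income": 40000.0, "missed_payments": 3.0, "tenure_years": 5.0}
scenarios = {
    "no_missed_payments": {"missed_payments": 0.0},
    "higher_income": {"income": 50000.0},
}
for name, score in run_what_if(toy_model, base, scenarios).items():
    print(f"{name}: {score:.2f}")
```

Comparing each scenario's score with the base score shows which feature changes move the prediction most, which is the kind of insight the what-if view is meant to give.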

Track slippages and deliver consistency in production

Track slippages and errors in production by scrutinizing your models for drifts, bias and vulnerabilities.

Provide confidence.

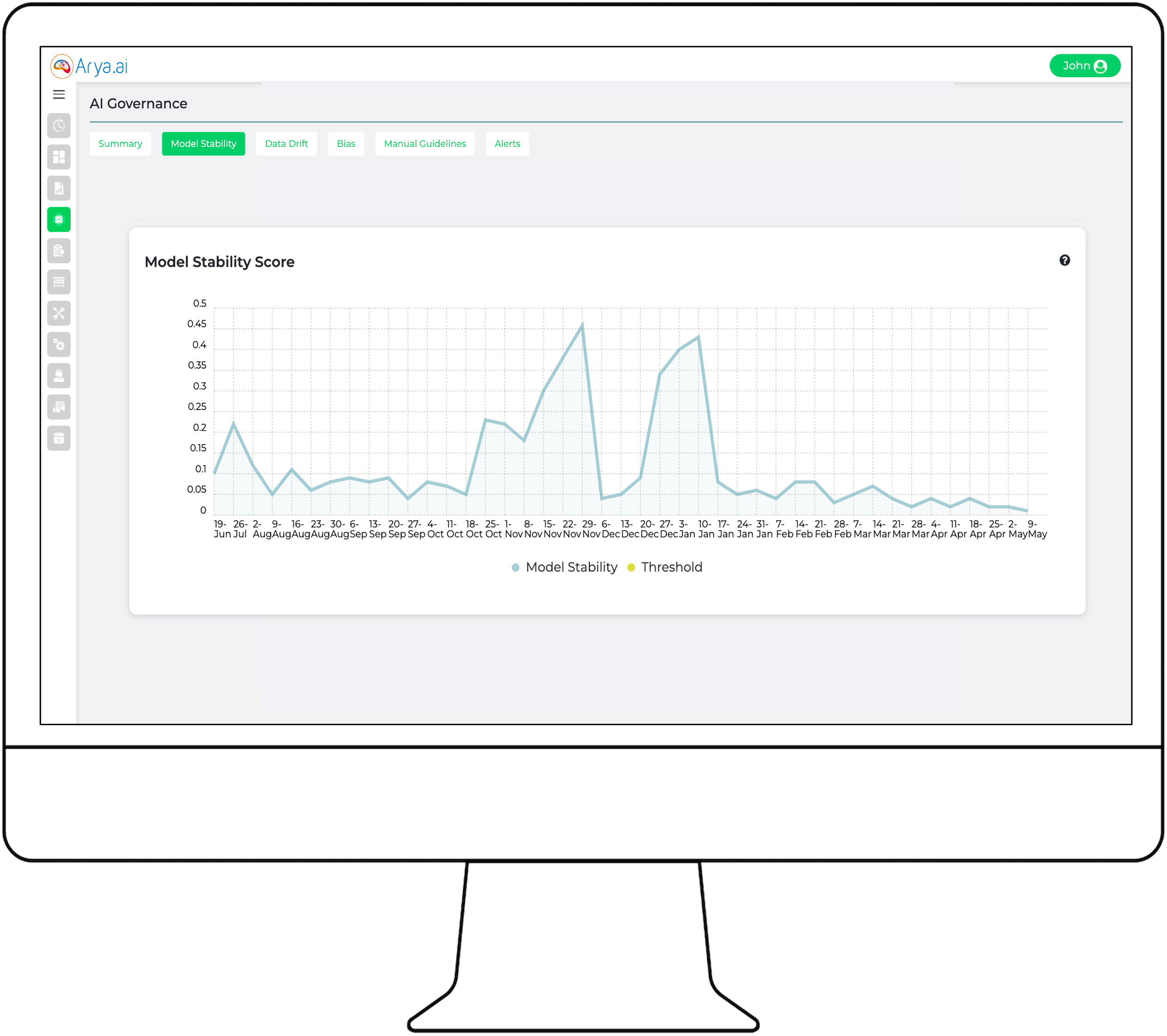

Model Drift Monitoring: Track & identify data issues in production

Deliver consistency to your models in production by preemptively tracking failures and intervening proactively when required.

Monitor model drift

Model drift, one of the most common reasons for model failure in production, can severely degrade model performance. If it is not tracked, drift goes unnoticed and slippages are never corrected.

AryaXAI tracks model drift in real-time and provides the root cause for the model failures. Users can define the drift thresholds and get notified proactively.

Track model drift proactively

Customize the thresholds as per your risk profile

Prevent model failure ahead of time

Define the preferred tracking metrics from the options
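As an illustration of threshold-based drift alerts, the sketch below computes the Population Stability Index (PSI), one widely used drift metric, between a baseline sample and a production sample, and raises an alert when it exceeds a user-defined threshold. The choice of PSI, the equal-width binning, and the 0.2 default (a commonly cited rule of thumb) are assumptions for this example; AryaXAI's own metrics and thresholds may differ, and `check_drift` and the feature name are hypothetical.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline (e.g. training) sample and a
    production sample, using equal-width bins over the baseline's range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values into the edge bins
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(feature: str, expected, actual, threshold: float = 0.2) -> bool:
    """Hypothetical alert hook: compare PSI with a user-defined threshold."""
    score = psi(expected, actual)
    if score > threshold:
        print(f"ALERT: '{feature}' drifted (PSI={score:.3f} > {threshold})")
        return True
    return False


baseline = [i / 100 for i in range(100)]          # spread evenly over [0, 0.99]
production = [0.5 + i / 200 for i in range(100)]  # shifted into the upper half
check_drift("income_ratio", baseline, production)
```

Making the threshold a parameter mirrors the idea of customizing thresholds to your risk profile: a stricter profile simply passes a lower value.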

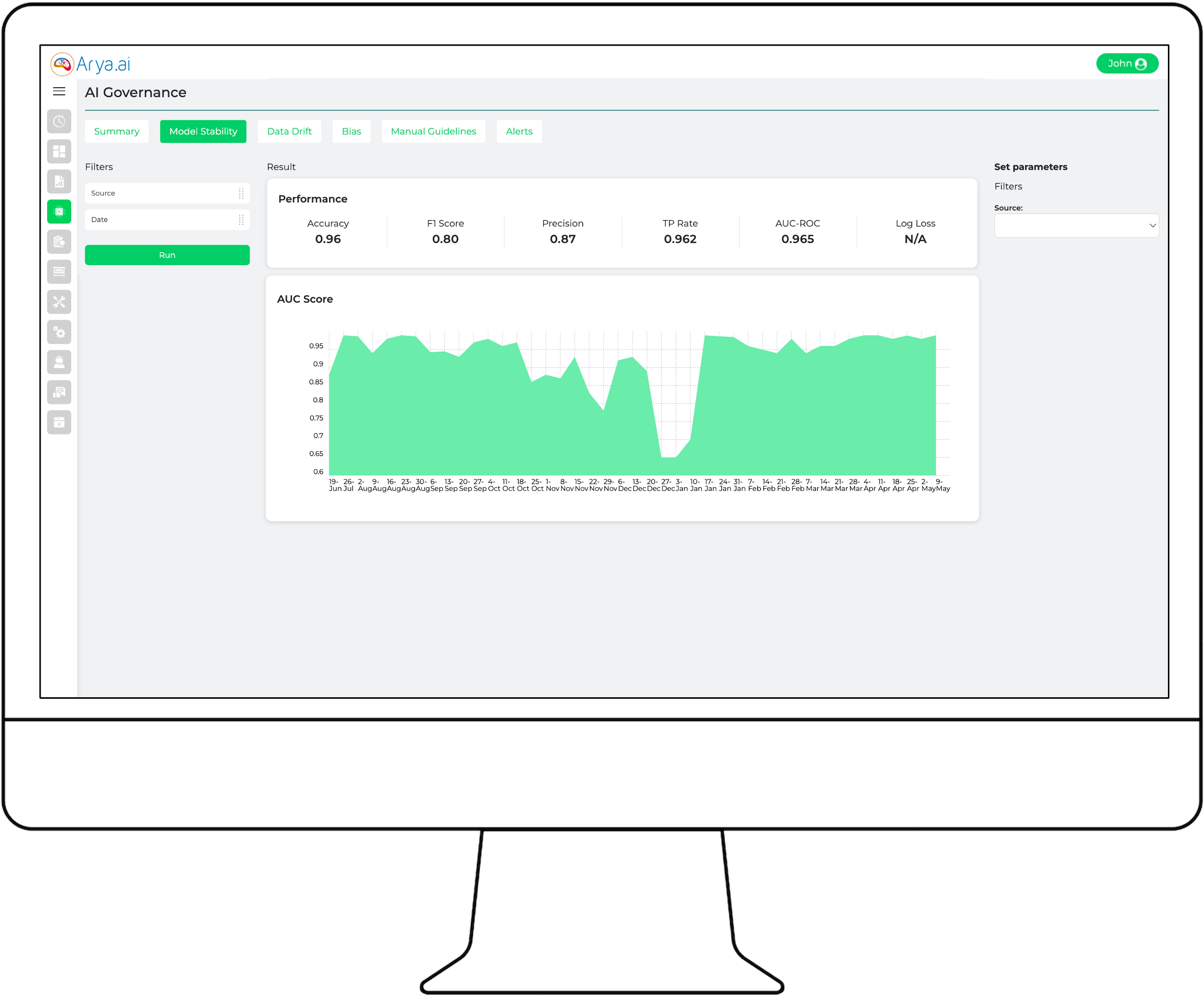

Monitor model performance

Tracking model performance is difficult for use cases where feedback is coarse or delayed. For mission-critical applications, such delayed feedback can be risky.

In addition to mapping actual model feedback, AryaXAI can estimate model performance so that users can assess it preemptively and course-correct how the model is used.

Track various model performance metrics

Define alerts and get notified about the performance deviation

Ensure consistency in model performance

Slice and dice samples and calculate metrics dynamically
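One simple way to estimate performance before labels arrive, assuming the model's predicted probabilities are well calibrated, is to treat each prediction's confidence as its chance of being correct and average over production traffic. The sketch below does this for a binary classifier; it is a simplified form of confidence-based performance estimation chosen for illustration, not a description of how AryaXAI estimates performance, and it becomes unreliable if calibration itself drifts.

```python
from typing import Sequence


def estimated_accuracy(probs: Sequence[float], threshold: float = 0.5) -> float:
    """Estimate accuracy without labels from calibrated P(y=1) scores.
    If we predict 1 (p >= threshold), the chance of being right is p; otherwise 1 - p."""
    if not probs:
        raise ValueError("need at least one prediction")
    total = 0.0
    for p in probs:
        total += p if p >= threshold else 1.0 - p
    return total / len(probs)


production_probs = [0.9, 0.8, 0.3, 0.6, 0.1]  # toy model outputs, no labels yet
print(f"Estimated accuracy: {estimated_accuracy(production_probs):.2f}")
```

Once true labels arrive, comparing this estimate with the realized accuracy is a natural check on whether the calibration assumption still holds.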

Track slippages.

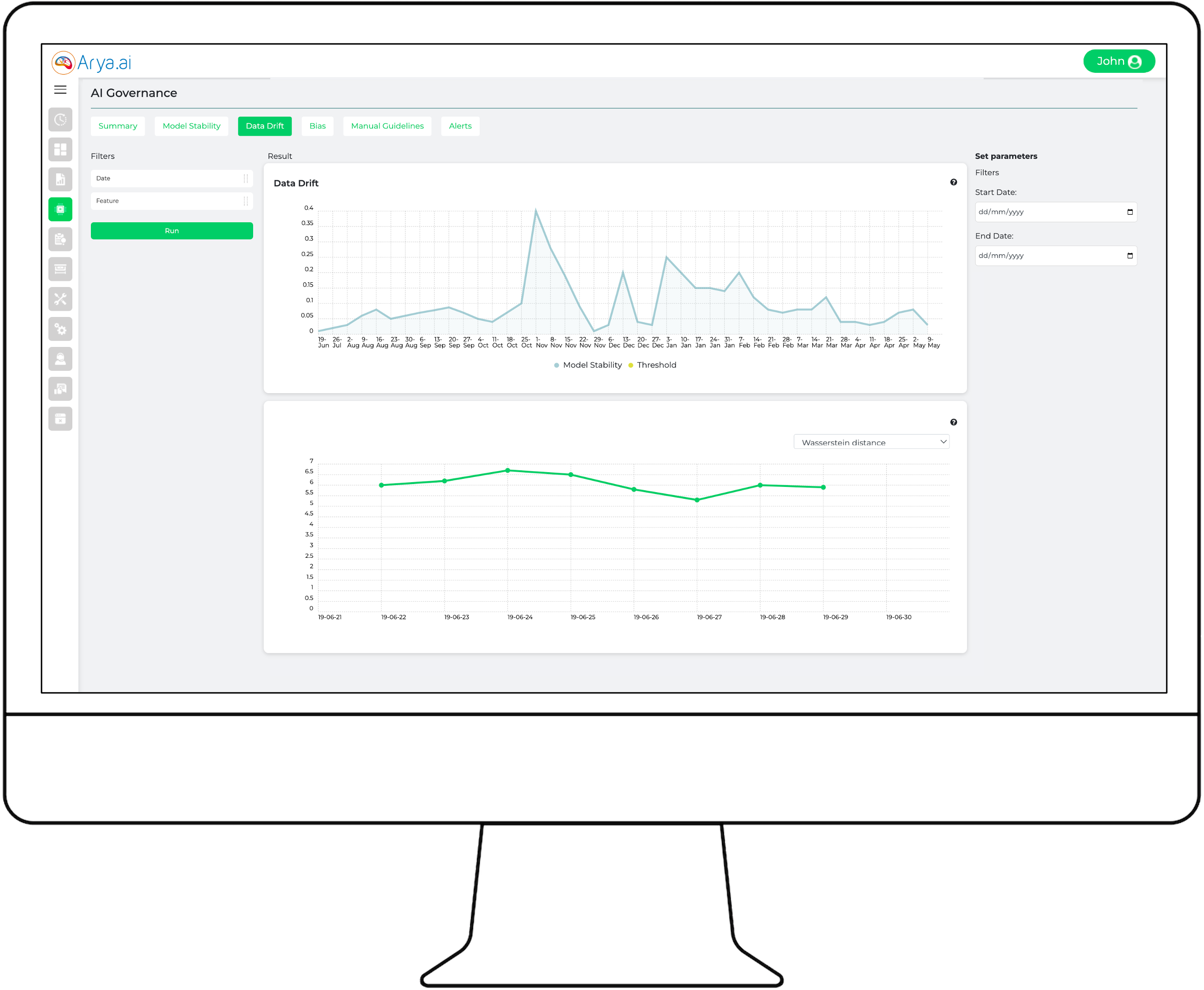

Monitor data drift proactively and prevent decay in model performance

Track how data drifts in production, from simple variations to complex relational changes. Analyze predictions in relation to entire datasets or specific groups.

Keep ML models relevant in production

Catch input problems that can negatively impact model performance.

AryaXAI helps monitor even hard-to-detect performance problems to prevent your model from growing stale.

Better understand how to begin resolution

With real-time alerts, automatically identify potential data and performance issues so you can take immediate action. Easily pinpoint drift across thousands of prediction parameters.

Scalable monitoring in real time

Flag data discrepancies by slicing data into groups

Define the recursive actions for data drift scenarios

Complete visibility into model behaviour across training, test and production

Identify when and how to retrain models
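Flagging data discrepancies by slicing data into groups can be illustrated with a per-segment two-sample Kolmogorov-Smirnov (KS) statistic: compare each segment's production values with the same segment's training values, and flag segments whose gap exceeds a cut-off. The KS statistic, the 0.3 cut-off, the segment names and the helper functions are all illustrative assumptions, not AryaXAI's implementation.

```python
from bisect import bisect_right
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical CDFs of samples a and b."""
    a, b = sorted(a), sorted(b)
    gap = 0.0
    for x in a + b:  # the maximum gap occurs at one of the observed values
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def group(rows: Iterable[Tuple[str, float]]) -> Dict[str, List[float]]:
    """Collect (segment, value) rows into one list of values per segment."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for segment, value in rows:
        groups[segment].append(value)
    return groups


def drift_by_segment(train, prod, threshold: float = 0.3) -> Dict[str, float]:
    """Return segments whose production distribution differs from training by more
    than `threshold` (an illustrative cut-off, not a recommended value)."""
    train_groups, prod_groups = group(train), group(prod)
    flagged = {}
    for segment, values in prod_groups.items():
        if segment in train_groups:
            stat = ks_statistic(train_groups[segment], values)
            if stat > threshold:
                flagged[segment] = stat
    return flagged


train = [("north", v) for v in [1, 2, 3, 4, 5]] + [("south", v) for v in [1, 2, 3, 4, 5]]
prod = [("north", v) for v in [1, 2, 3, 4, 5]] + [("south", v) for v in [6, 7, 8, 9, 10]]
print(drift_by_segment(train, prod))
```

Checking each slice separately matters because drift confined to one segment can be diluted, and therefore missed, when the whole dataset is compared at once.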

Be fair.

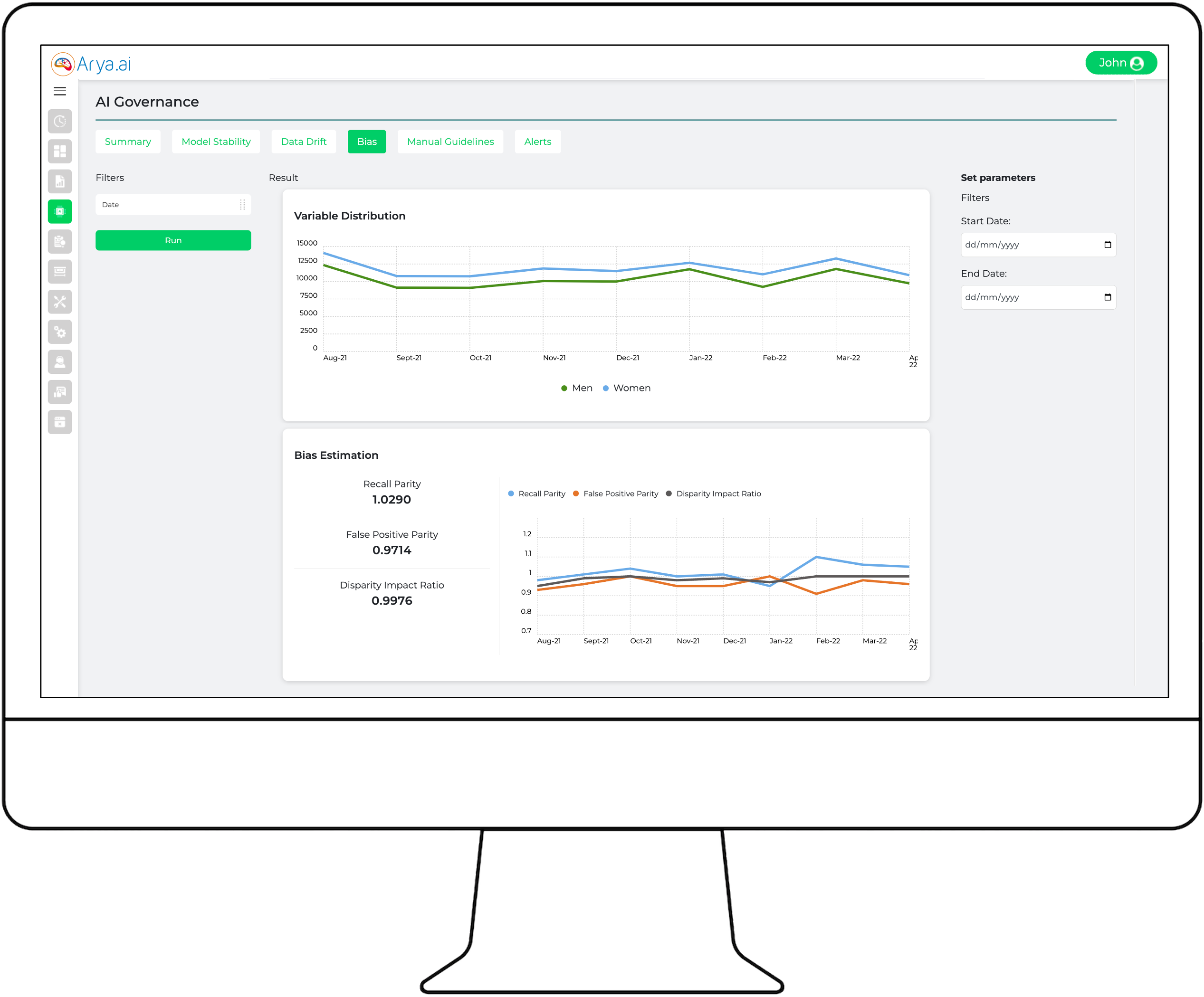

Track and mitigate deep-rooted model bias before it is exposed

Detect, monitor and manage potential risks of bias to stay ahead of regulatory risks.

A more efficient way to debias ML models

AI bias in models can cost organizations heavily. ML models can replicate or amplify existing biases, often in ways that go undetected until production.

AryaXAI prevents your models from producing unfair outcomes for certain groups.

Navigate the trade-off between accuracy and fairness

Mitigate unwanted bias with a window into the workings of your ML model. Routinely and meticulously scrutinize data to identify potential biases early and correct them appropriately.

Bias is real!

Selection bias

Measurement bias

Information bias

Sample bias

Exclusion bias

Algorithm bias

Ensure fairness in predictions

Detect and mitigate AI bias in production

AryaXAI uses multiple tracking metrics to identify bias

Track model outcomes against custom bias metrics

Customize bias monitoring by defining the sensitive pool

Improve model fairness to reduce business risk
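Two widely used group-fairness metrics are the demographic parity difference (the gap in positive-prediction rates between a group and a reference group) and the disparate impact ratio (the ratio of those rates). The sketch below computes both over a user-chosen sensitive attribute, in the spirit of "defining the sensitive pool". The metric choice, the 0.8 default (the familiar "four-fifths" rule of thumb) and the helper names are assumptions for illustration; they are not AryaXAI's built-in bias metrics.

```python
from collections import defaultdict
from typing import Dict, Sequence


def positive_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each group of the sensitive attribute."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, g in zip(predictions, groups):
        totals[g] += 1
        positives[g] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def bias_report(predictions, groups, reference: str, min_ratio: float = 0.8) -> Dict[str, dict]:
    """Compare each group's positive rate with the reference group's.
    min_ratio=0.8 mirrors the four-fifths rule of thumb; adjust to your risk profile."""
    rates = positive_rates(predictions, groups)
    ref_rate = rates[reference]
    report = {}
    for g, rate in rates.items():
        if g == reference:
            continue
        ratio = rate / ref_rate if ref_rate > 0 else float("nan")
        report[g] = {
            "parity_difference": rate - ref_rate,
            "disparate_impact": ratio,
            "flagged": ratio < min_ratio,  # nan compares False, so an undefined ratio is not flagged
        }
    return report


predictions = [1, 1, 1, 0, 1, 0, 0, 0]  # toy model decisions
groups = ["A"] * 4 + ["B"] * 4          # toy sensitive attribute
for g, metrics in bias_report(predictions, groups, reference="A").items():
    print(g, metrics)
```

In this toy data, group B receives positive outcomes at one third of group A's rate, well below the 0.8 ratio, so it is flagged.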

Enterprise-grade model audit for accurate AI performance

Enterprise-grade model auditing for organizations that need insight into risk evaluations and answers in any situation.

Develop safeguard controls

Perform in-depth audits of ML artefacts

Understand the value of your ML models, uncover business risks and develop safeguard controls to avoid risks. Integrate fairness, explainability, privacy, and security assessments across workflows.

Perform in-depth audits of ML artefacts

For mission-critical use cases, ML auditing ensures systematic execution of processes to identify associated risks and develop safeguard controls to avoid risks. AryaXAI methodically captures various auditable artefacts during training and production.

Organizations can define audit cadences and execute them automatically in AryaXAI. Users can review these reports anytime, anywhere. Key observations can be shared between teams to inform all stakeholders about gaps and make the necessary corrections. And when critical information must be shared with regulators or compliance teams, AryaXAI helps aggregate it quickly.

Data Preparation

Data Selection

- Which data is selected?

- The rationale behind the choice

- Selection authority

Data Preparation

- Detail about data removal

- Bias mitigation

- Trueness representation

Feedback Gathering

- Selection of retraining data

- Analysis of errors

- Case-wise true labels

Model Building

Model training

- Technique Selection

- Model training

- Final model

Model debug

- Validating explainability

- Review of global explanations

- Sufficiency of explanations

Model Testing

Model Training

- Model challenger definition

- Records of challenger performance

- Segmentations/performances

Test Data

- Sufficiency of test scenarios

- Failure analysis

- Usage risk estimation

- Business risk

Success Criteria

- Defining success criteria

- Simulated Usage

- Benefits estimation

- Sign off authorization

Model Testing

Case-wise

- Predictions record

- Model state

- Local explainability

Model consistency

- Events of data drift

- Events of model drift

- Records of biased predictions

- Records of model degradation

Protect against AI uncertainty with advanced AI policy controls

Protect against AI uncertainty by defining policies that reflect organisational, societal and business preferences.

Simplify management and standardize governance

Preserve business and ethical interests by enforcing policies on AI

Enhance the applicability of the ML model with contextual policy implementation across multiple teams and stakeholders

Administer policy controls on data and model outcome

Easily add, edit and modify policies with AryaXAI. Ensure regulatory governance with customizable controls for maintaining and modifying policies.
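One way to picture policy controls on model outcomes is as a list of named rules, each pairing a condition on the input and prediction with an action to take when the condition holds. The sketch below evaluates such rules against a single prediction. The `Policy` structure, the example rules and the action names are hypothetical and only illustrate the concept; they do not reflect how AryaXAI represents policies.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]


@dataclass(frozen=True)
class Policy:
    """A hypothetical outcome policy: if `violated` returns True, apply `action`."""
    name: str
    violated: Callable[[Features, float], bool]
    action: str


def apply_policies(features: Features, score: float, policies: List[Policy]) -> List[Tuple[str, str]]:
    """Evaluate every policy against one prediction and list the triggered actions."""
    return [(p.name, p.action) for p in policies if p.violated(features, score)]


policies = [
    Policy("minimum_age", lambda f, s: f["age"] < 18, "reject"),
    Policy("high_score_low_income", lambda f, s: s > 0.9 and f["income"] < 10_000, "manual_review"),
]

print(apply_policies({"age": 17, "income": 5_000}, 0.95, policies))
print(apply_policies({"age": 30, "income": 60_000}, 0.95, policies))
```

Keeping rules as data rather than hard-coding them into the model pipeline is what makes it practical to add, edit or remove them without retraining.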

Define responsible requirements across a variety of policies

Enhance the applicability of the ML model with contextual policy implementation across multiple teams and stakeholders.

Centralized repository to fine-tune and manage policies

Enable cross-functional teams to define policy guardrails that ensure continuous risk assessment. A centralized repository makes it easy to add, edit and productionize policies.

Version controls to measure and manage AI governance, risk, and compliance

Streamline monitoring and management of policy changes with version controls. Track and retrace policy changes across roles.
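Version control for policies can be thought of as an append-only history per policy, where each change records who made it, in which role, and when, so that any earlier version can be retraced. The in-memory `PolicyStore` below is a hypothetical sketch of that idea under those assumptions, not AryaXAI's storage model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class PolicyVersion:
    """One immutable revision of a policy rule."""
    version: int
    rule: str
    author: str
    role: str
    changed_at: datetime


@dataclass
class PolicyStore:
    """Hypothetical append-only store: every save adds a new version, nothing is overwritten."""
    history: Dict[str, List[PolicyVersion]] = field(default_factory=dict)

    def save(self, name: str, rule: str, author: str, role: str) -> PolicyVersion:
        versions = self.history.setdefault(name, [])
        entry = PolicyVersion(len(versions) + 1, rule, author, role, datetime.now(timezone.utc))
        versions.append(entry)
        return entry

    def current(self, name: str) -> PolicyVersion:
        return self.history[name][-1]

    def at_version(self, name: str, version: int) -> PolicyVersion:
        return self.history[name][version - 1]  # versions are numbered from 1


store = PolicyStore()
store.save("max_loan", "amount <= 50000", author="alice", role="risk")
store.save("max_loan", "amount <= 40000", author="bob", role="compliance")
for v in store.history["max_loan"]:
    print(v.version, v.rule, v.author, v.role)
```

Because earlier versions are never overwritten, the history doubles as an audit trail of who changed what across roles.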