Gain trust by explaining your models accurately!

True-to-model explanations

In addition to well-tested open-source XAI algorithms, AryaXAI uses a proprietary XAI algorithm to provide true-to-model explainability for complex techniques like deep learning.

Provides multiple types of explanations

Different use cases and different users call for different types of explanations. With AryaXAI, you can provide additional explanation types such as similar cases and what-ifs.

Plug and use techniques that work

You can deploy any XAI method on AryaXAI by simply uploading your model and sending the engineered features. The framework knows how to handle various datasets.

Near real-time explainability through API

Depending on the complexity of your model, AryaXAI can provide near real-time explanations through an API. You can integrate them into downstream systems of your choice.

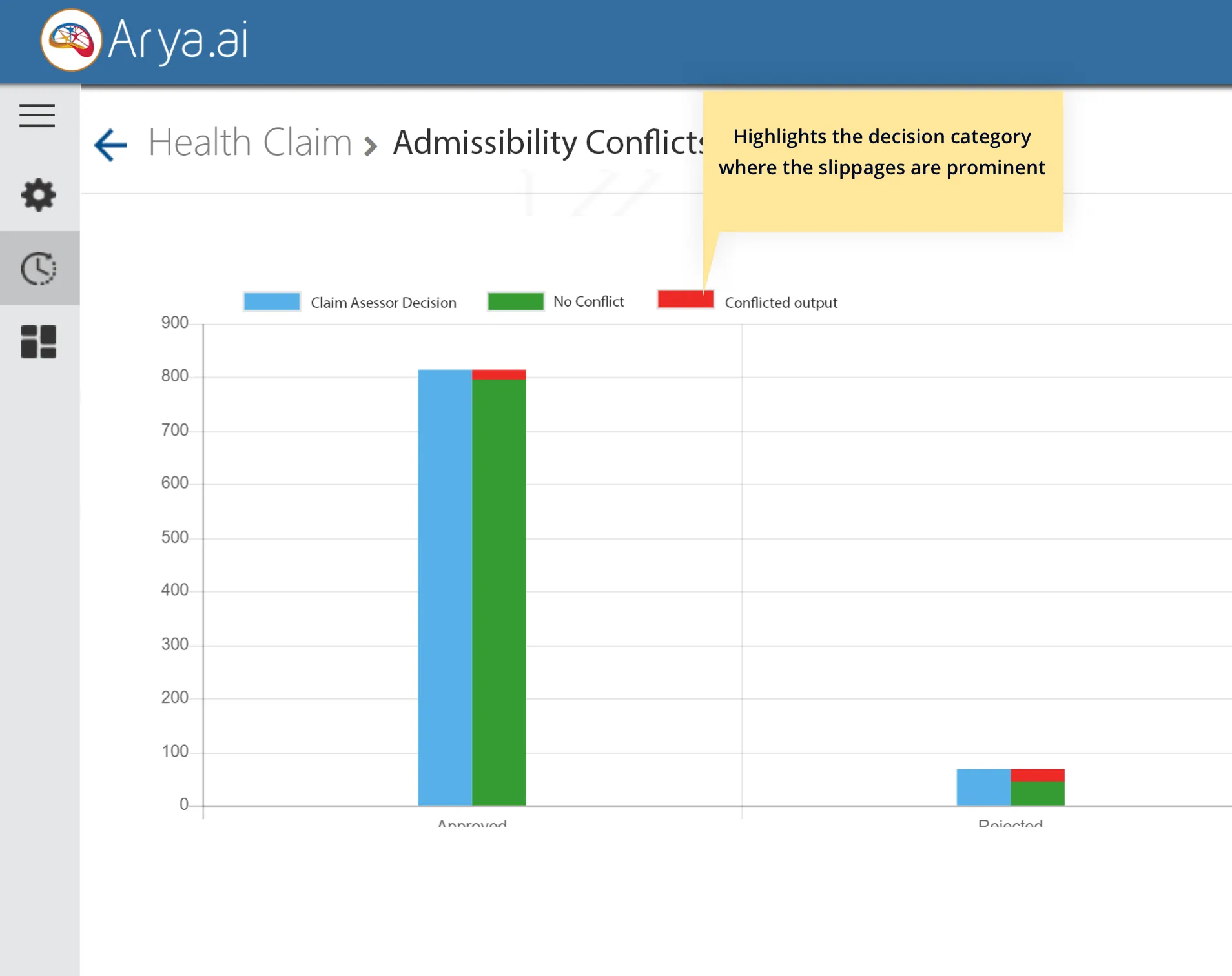

Decode, Debug & Describe your models

AI solutions are evaluated not only on performance but also on how transparent and explainable they are to all key stakeholders: Product/Business Owners, Data Scientists, IT, Risk Owners, Regulators and Customers. Explainability is a fundamental requirement for mission-critical AI use cases and can be the deciding factor in whether a model is adopted.

AryaXAI's explainable AI methods go beyond open-source approaches to ensure that models are explained accurately and consistently. For complex techniques like deep learning, AryaXAI uses a patent-pending algorithm called ‘backtrace’ to provide multiple explanations.

Similar cases: References as explanations

Training data is the basis of what a model learns. Identifying similar cases from the model's point of view can help explain why and how the model arrived at a prediction.

AryaXAI uses model explainability to match the inference sample against the training data and return a list of similar cases. An in-depth analysis lets users compare data similarity, feature importance, and prediction similarity across these cases. References as explanations can be a powerful source of evidence in interpreting model behaviour.
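To make the idea of similar cases concrete, here is a minimal sketch of one generic way to retrieve them: an importance-weighted nearest-neighbour search over the training rows. This is not AryaXAI's method, which is not described here. The function name `similar_cases`, the `importance` weights, and the toy data are all hypothetical, and features are assumed to be already scaled to comparable ranges.

```python
import math


def similar_cases(query, training_rows, importance, k=3):
    """Return the k (row_index, distance) pairs closest to `query`.

    Distance is Euclidean with each squared feature difference multiplied
    by that feature's importance weight, so features the model relies on
    more heavily contribute more to similarity. (Hypothetical helper.)
    """
    if len(query) != len(importance):
        raise ValueError("query and importance must have the same length")
    scored = []
    for idx, row in enumerate(training_rows):
        dist = math.sqrt(
            sum(w * (q - x) ** 2 for w, q, x in zip(importance, query, row))
        )
        scored.append((idx, dist))
    # Smallest distance first; keep only the k nearest rows.
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


# Toy training data (assumed pre-scaled to [0, 1]) and made-up weights.
training_rows = [
    [0.10, 0.20, 1.0],
    [0.80, 0.90, 0.0],
    [0.15, 0.25, 1.0],
    [0.90, 0.85, 0.0],
]
importance = [0.5, 0.4, 0.1]
query = [0.12, 0.22, 1.0]

nearest = similar_cases(query, training_rows, importance, k=2)
for idx, dist in nearest:
    print(f"training row {idx}: distance {dist:.4f}")
```

In practice the weights would come from whichever attribution method explains the model, and the retrieved rows would be shown next to their labels and predictions so a reviewer can judge whether the references support the current prediction.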

What-if: Run hypothetical scenarios right from the GUI

Validate and build trust in the model by simulating input scenarios. Understand which features need to change to obtain the desired predictions.

AryaXAI allows users to run multiple ‘What-if’ scenarios directly from the GUI. The framework queries the model in real time and shows the resulting predictions to the user. This is not limited to advanced users; even business users can run scenarios without any technical training or skills. Such ‘What-if’ scenarios can give users quick insights into the model.
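The mechanics behind a what-if scenario can be sketched in a few lines: copy the original input, override the features of interest, and ask the model for a new prediction. The example below is an illustration under stated assumptions, not the AryaXAI GUI or API. `toy_model`, its coefficients, and the feature names `income` and `debt_ratio` are hypothetical stand-ins for a deployed model.

```python
import math


def toy_model(features):
    """Stand-in for a deployed model: a logistic score over two features.

    The coefficients are invented for illustration only.
    """
    z = 0.00004 * features["income"] - 3.0 * features["debt_ratio"]
    return 1.0 / (1.0 + math.exp(-z))


def what_if(model, baseline, changes):
    """Compare the model's prediction before and after overriding features.

    `baseline` is left unmodified; `changes` may only name features that
    already exist in `baseline`.
    """
    unknown = set(changes) - set(baseline)
    if unknown:
        raise KeyError(f"unknown features: {sorted(unknown)}")
    scenario = {**baseline, **changes}  # copy, then apply the overrides
    before = model(baseline)
    after = model(scenario)
    return {"baseline": before, "scenario": after, "delta": after - before}


baseline = {"income": 50000, "debt_ratio": 0.6}
result = what_if(toy_model, baseline, {"debt_ratio": 0.3})
print(f"baseline={result['baseline']:.3f} "
      f"scenario={result['scenario']:.3f} "
      f"delta={result['delta']:+.3f}")
```

Running several such scenarios side by side shows which feature changes move the prediction in the desired direction, which is the question a what-if view is meant to answer.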